ChatGPT and the Lack of Trust: a Guide to the Responsible Use of Trusted AI in Companies

The release of the AI application ChatGPT is dominating the public debate on the capabilities and impact of artificial intelligence. With its impressive language processing capabilities and its versatility across application areas, ChatGPT is currently second to none. The hype around the technology is therefore justified, but it often overlooks the fact that, with its current architecture, ChatGPT will not be a solution for many application areas and business needs. The reasons for this lie less in the limitations of the technology than in the governance and regulation of its use (Trusted AI).

An indispensable guide for anyone responsible for the responsible use of AI in companies.

The Limits of Technology

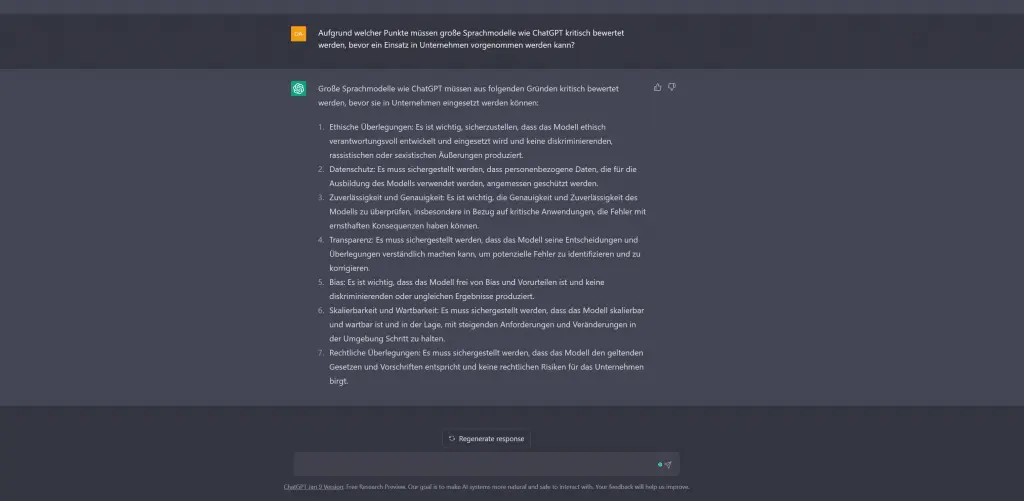

The cloud service offered by OpenAI with ChatGPT must be critically evaluated on the basis of the following points before it is used in companies:

Trust

The great capabilities of the machine conceal the fact that the results generated can only be trusted to a limited extent. The lack of verifiability of the results due to possible bias, coupled with a lack of transparency, makes ChatGPT difficult to use in a company. There is a lack of trust in AI. But first things first:

The trustworthiness of the generated results is limited due to the lack of verifiability. In contrast to a search engine such as Google, which provides answers from specific sources of information, ChatGPT generates answers synthesized on the basis of learned world knowledge. ChatGPT does not provide sources or similar information that would make a result directly verifiable. Consequently, there is no way to determine what data the AI was trained on, whether that data is representative, up-to-date, or even valid.

Like all AI models, ChatGPT reproduces biases present in its training data, which can lead to inaccurate or unfair results. This can be caused, for example, by groups that are underrepresented in the training data, so that certain perspectives or experiences are not taken into account. If companies using AI systems cannot inspect and control the data on which the models were trained, they cannot know which biases are present and cannot evaluate the results critically.

The lack of verifiability is closely linked to another important decision criterion for the use of AI:

Transparency

Transparency is a decisive factor for the use of AI systems such as ChatGPT. However, the decision-making processes of such AI models and their exact internal operations are often difficult to understand. For this reason, large cloud systems of this kind are often compared to a black box: users input a query and receive an output, but how the result was reached remains opaque. This is particularly problematic when it comes to evaluating or interpreting the results of AI models. A lack of traceability and transparency can mean that models are not trustworthy and that their results cannot be used with confidence. It is therefore important to be able to trace a model's creative, synthesized output back to the original training data in order to understand and evaluate model decisions.

A study by PwC confirms this criticism: according to the study, almost all companies surveyed attach great importance to security, transparency (including in the selection of training data) and confidentiality when using AI models. These requirements for the use of artificial intelligence in companies can hardly be met by large language models such as ChatGPT.

Reproducibility and Reliability

Another major challenge with ChatGPT is the lack of reproducibility and reliability in its outputs. Large language models are continuously evolving based on vast datasets, making them highly “creative.” However, this adaptability also makes it difficult to predict and replicate responses consistently. This can lead to problems, especially in applications that require precise and reliable results.

Imagine an insurance company that uses a large language model like ChatGPT to automatically perform the risk assessment of insurance applications. If the model is constantly learning and its operations cannot be reproduced, it may assess the same application differently each time, or produce assessments that cannot be explained.

This poses a major problem, as insurance companies depend on a reliable and reproducible assessment in order to be able to make fair and consistent decisions. Uncertainty and unpredictability can quickly lead to wrong decisions and injustice.
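One way to make this concern measurable is to submit the same application several times and compare the results. The following is a minimal sketch under stated assumptions, not a definitive test procedure: `assess` is a hypothetical stand-in for whatever call returns the model's risk assessment, and the function name and report fields are illustrative only.

```python
from collections import Counter
from typing import Callable


def reproducibility_report(
    assess: Callable[[str], str],  # hypothetical model call: application text -> assessment
    application: str,
    runs: int = 5,
) -> dict:
    """Run the same application through `assess` several times and summarize agreement."""
    # Count how often each distinct assessment was returned.
    outcomes = Counter(assess(application) for _ in range(runs))
    most_common_count = outcomes.most_common(1)[0][1]
    return {
        "distinct_outcomes": len(outcomes),
        "agreement": most_common_count / runs,  # share of runs with the most frequent result
        "reproducible": len(outcomes) == 1,
    }
```

A report with `reproducible` set to `False` for identical input would indicate that the system is not yet suitable for decisions that must be consistent across applicants.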

As long as AI systems like ChatGPT function as opaque black boxes, they cannot be effectively integrated into critical business processes.

Confidentiality and Data Protection

The use of ChatGPT poses a further high risk for companies, as both the transparency of the processing of company data and compliance with the provisions of the GDPR are problematic. It is not transparent where and how the AI stores new information and whether this is then considered “intellectual property”. In addition, the use of ChatGPT in many operational application scenarios is contrary to the principles of the General Data Protection Regulation, potentially leading to compliance violations and legal consequences.

The governance and regulation of the use of AI play an important role in the discussion about the limitations and boundaries of AI systems such as ChatGPT. In Germany and at the European level, there are already various efforts to have AI systems certified. One example is the European Commission’s “Trustworthy AI” initiative, which focuses on creating a framework for ethical and responsible applications of AI systems. There are other initiatives from PwC and Deloitte as well as from associations such as the VDE, which focus on creating (ethical) guidelines and standards for the use of AI in companies.

Do Ethical AI Certifications Guarantee a Responsible Approach?

However, it is questionable whether such certifications are actually able to ensure the ethical and responsible use of AI systems. There are still many uncertainties and challenges to overcome when it comes to using AI systems in a way that is in the interests and welfare of all stakeholders.

Overall, it is important to regulate the use of AI systems in such a way that the interests of developers and users as well as the interests of society as a whole are taken into account. Certifications such as “Trustworthy AI”, “Ethical AI” and “AI Trust Label”, or self-evaluation catalogs such as ALTAI or AIC4, can be a step in the right direction. However, it must be critically questioned whether they are actually able to solve the problems and challenges associated with the use of AI systems. Developers, users and regulators need to work together on solutions to ensure that AI systems are used ethically and responsibly and make a positive contribution to society.

In which Use Cases is a Different Approach to Artificial Intelligence Required?

AI Integration in Corporate Environments

Compliance, data protection and, in many areas, regulation (particularly in the healthcare and financial sectors) require the use of AI under the control of the company using it. From a technological perspective, this requires the option of using AI on-premise or in a hybrid cloud architecture. The latter enables, for example, the use of “Trusted AI” to anonymize personal data or filter out confidential information for subsequent reuse in a public cloud infrastructure such as Microsoft Azure.
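The anonymization step in such a hybrid setup can be illustrated with a small on-premise filter that runs before any text leaves the company. This is a minimal sketch under stated assumptions: the two regular expressions and the function names are hypothetical and purely illustrative, and simple patterns like these are far from sufficient for reliable detection of personal data in production.

```python
import re

# Illustrative patterns only (assumption): real PII detection needs much broader coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s/-]{7,}\d")


def redact(text: str) -> str:
    """Replace e-mail addresses and phone-like numbers with neutral placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


def prepare_for_public_cloud(records: list[str]) -> list[str]:
    """Redact every record on-premise before it is passed to a public cloud service."""
    return [redact(record) for record in records]
```

Only the output of `prepare_for_public_cloud` would then be forwarded to the public cloud, while the original records remain under the company's control.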

Integration into a corporate environment is not just a technical issue. The use of internal company data for training and controlling AI is just as crucial. AI is not only used at the interface for interaction with people, but also for the automated analysis and processing of data. To ensure AI models are contextually relevant, businesses must continuously provide them with company-specific data.

Compliance and Data Protection are the Main Challenges when Implementing AI

The use of AI in companies is often associated with compliance requirements, data protection regulations and regulatory requirements (particularly in the healthcare and financial sectors). In order to meet these requirements, it is necessary for AI systems to be operated under the control of the respective company. From a technological perspective, this requires the ability to operate AI either on-premise or in a hybrid cloud architecture.

In addition to the technical integration of AI into the corporate context, it is of great importance that internal company data is used for the training and control of AI. AI systems are not only used to interact with people, but also to automatically analyze and process data. In order to adapt AI to the specific corporate context, it is therefore essential to provide it with a wide range of company data on a regular basis.

The lack of trust, reliability, traceability and reproducibility is a general problem with cloud-based AI services, including ChatGPT. However, many AI models developed in companies also suffer from this lack. The reason for this is the lack of processes, procedures and applications that make it possible to create transparency and traceability based on one’s own data and to make not only the possibilities of AI but also its limiting factors visible and systematically controllable.

Deliberately Complementary Architecture of AI Software

For this reason, we have deliberately designed CONTEXTSUITE to complement conventional approaches. With an end-to-end processing pipeline and visual analysis tools, it supports the systematic end-to-end engineering of AI models from data preparation to tools for evaluating the reliability of AI models and agile productive use. This is the only way to implement reliable data-driven business and use it productively!

Conclusion

ChatGPT and subsequent solutions will disruptively change many areas of application and industries. This applies to all areas in which creative processes are to be automated on the basis of data that is neither confidential nor otherwise sensitive, in particular to applications in the fields of communication, marketing and the development of concepts and source code.

Specialized, reliably controllable and transparent AI is necessary wherever companies work with sensitive data or depend on reproducibility, reliability, or the ability to standardize and contextualize AI with regard to their own data. This applies both to the automation of processes and to the AI-supported preparation of data products.

Project manager

Andreas studied Technology & Media Communication and is primarily responsible for internal and external communication and documentation within the company. This gives him an optimal overview of the various technologies, applications and customers of MORESOPHY.
